Checkout Extensibility upgrade – July 2024

Executive Summary:

Shopify is deprecating the checkout.liquid theme file for the Information, Shipping and Payment pages on 13 August 2024, with other areas, including the Thank you and Order status pages, to follow in August 2025.

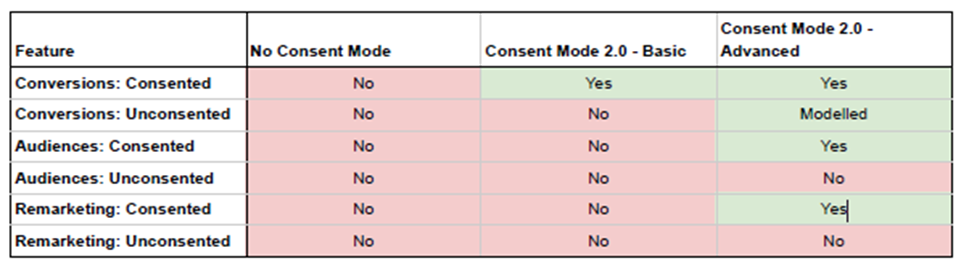

Existing customisations and checkout tracking integrated on these pages will stop working after this date. The checkout itself will keep working, but any third-party tracking, and the way that data is collected, will be affected. When a user rejects marketing or analytics cookies, Shopify does not fire any tracking in the checkout, whether or not that tracking sets cookies. This seriously affects tools such as Google Ads, which rely on cookieless pings to model key metrics back into the platform.

It’s imperative that you review your setup as soon as possible.

What is the announcement?

The deadline to upgrade from checkout.liquid to Checkout Extensibility for the Information, Shipping and Payment pages is 13 August 2024. The checkout.liquid file is deprecated and has not received new functionality since 2021. With regulations changing worldwide, including new payment card industry requirements under PCI DSS v4, checkout.liquid is less secure than Checkout Extensibility, and Shopify will discontinue support for it.

Checkout Extensibility overview

Why is checkout.liquid being deprecated?

Customising the Shopify checkout via checkout.liquid is typically complex and time-intensive, and requires advanced coding knowledge. These customisations often break when Shopify upgrades the checkout and, in some cases, lead to poor checkout performance and a substandard buyer experience. They can also introduce security risks.

A further factor behind this change was an incident in August 2022, when a conflict with Google Tag Manager in the theme code caused a 12-hour global outage of the Shopify checkout.

Shopify has sought to protect checkout stability and performance while still adding meaningful new capabilities and functionality. To that end, it has invested in Checkout Extensibility, a suite of apps and tools that make it easier to customise the Shopify checkout and build bespoke checkout experiences. Shopify describes Checkout Extensibility as secure, code-free, app-based, upgrade-safe and higher-converting. It also integrates with Shop Pay, which means that, for the first time, express checkout can be customised.

Crucially, Checkout Extensibility replaces the need for checkout.liquid.

How will Checkout Extensibility impact your tracking?

Although Shopify positions this as ‘no change’ to how tracking works, it severely limits your ability to pass data into your media and analytics platforms (e.g. Google Ads, Meta and GA4).

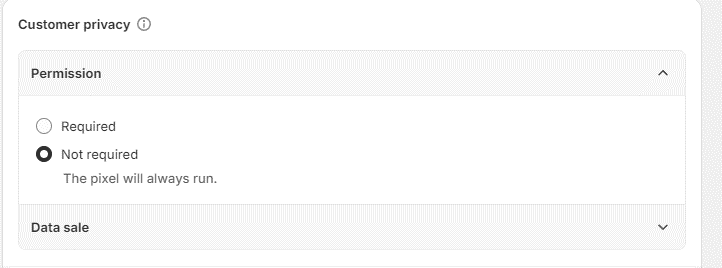

Shopify’s guidance and interface state that your pixels (where tracking scripts sit in Checkout Extensibility) will always run (see screen grab).

In practice this is not the case: your tracking script will not run if a user rejects either marketing or analytics cookies. Blocking all tracking in this situation also prevents cookieless pings, which Google tools use to model key metrics when a user opts out of cookies. This is a global issue and will continue to affect tracking for every merchant who has upgraded to Checkout Extensibility.
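To make the difference concrete, here is a minimal TypeScript sketch contrasting the two behaviours. It is a simplified, hypothetical model, not Shopify or Google code: `ConsentState`, `Hit`, `Strategy` and `checkoutHit` are illustrative names. The "block-all" strategy mirrors the checkout behaviour described above (nothing fires unless both analytics and marketing cookies are accepted); the "cookieless-ping" strategy mirrors the idea behind Google Consent Mode, where a visitor who rejects cookies still produces a ping flagged with the denied storage types.

```typescript
// Simplified, hypothetical model of two consent-handling strategies.
// None of these names are Shopify or Google APIs.

type ConsentState = {
  analytics: boolean; // visitor accepted analytics cookies
  marketing: boolean; // visitor accepted marketing cookies
};

type StorageFlag = "granted" | "denied";

type Hit =
  | { kind: "none" } // nothing reaches the ad/analytics platform
  | { kind: "full" } // normal tag firing with cookies
  | { kind: "cookieless"; analyticsStorage: StorageFlag; adStorage: StorageFlag };

type Strategy = "block-all" | "cookieless-ping";

function flag(allowed: boolean): StorageFlag {
  return allowed ? "granted" : "denied";
}

// Decide what a checkout tag sends for one visitor under a given strategy.
function checkoutHit(consent: ConsentState, strategy: Strategy): Hit {
  if (consent.analytics && consent.marketing) {
    return { kind: "full" };
  }
  if (strategy === "block-all") {
    // Modelled checkout behaviour: any rejection stops the pixel entirely.
    return { kind: "none" };
  }
  // Consent Mode idea: still send a ping, but flag the denied storage
  // so that no cookies are read or written.
  return {
    kind: "cookieless",
    analyticsStorage: flag(consent.analytics),
    adStorage: flag(consent.marketing),
  };
}

// Example: three visitors, only one of whom accepts everything.
const visitors: ConsentState[] = [
  { analytics: true, marketing: true },
  { analytics: true, marketing: false },
  { analytics: false, marketing: false },
];

for (const strategy of ["block-all", "cookieless-ping"] as const) {
  const signals = visitors.filter((v) => checkoutHit(v, strategy).kind !== "none").length;
  console.log(`${strategy}: ${signals} of ${visitors.length} checkouts send a signal`);
}
```

Under "block-all" only the first visitor sends anything (1 of 3), so the other two checkouts are invisible to the platform and cannot be modelled. Under "cookieless-ping" all three send a signal (3 of 3), two of them without cookies.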

Why is Shopify doing this with tracking?

It is not known why Shopify does not allow any cookieless tracking to fire from its platform. More broadly, Shopify is moving to Checkout Extensibility for several reasons:

Enhanced Security: The new framework is designed with better security features to protect both merchants and customers.

Scalability: Checkout Extensibility allows for a more scalable solution, accommodating growing businesses and increased traffic more effectively.

Improved Customisation: The new system offers more robust and flexible customisation options, enabling developers to create more sophisticated and tailored checkout experiences.

Future-Proofing: By adopting modern technology standards, Shopify aims to future-proof its platform, ensuring it remains relevant and capable of supporting new features and improvements.

What Does It Mean for You?

You will need to review the following:

Action Required: You need to migrate your checkout customisations from checkout.liquid to the new Checkout Extensibility framework before the 13 August 2024 deadline.

Learning Curve: Expect a learning curve as you familiarise yourself with the new framework and its capabilities.

Opportunities for Improvement: The new system provides opportunities to enhance and innovate your checkout process, potentially improving customer experience and conversion rates.

Support and Resources: Shopify will likely offer support and resources to assist with the transition, including documentation, tutorials, and possibly migration tools.

You’ll also need to QA your existing marketing tags to ensure everything works as expected.

What to do next?

- Audit how your tags are implemented in Checkout Extensibility

- Consider turning off Shopify’s privacy API and using an alternative consent solution so that cookieless pings continue to reach Google Marketing Platform

- Review using OneTrust (or a similar consent management platform) alongside GTM, and test that consent is passed through as expected
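If you pursue the last two steps, the key idea is that your consent platform’s decision is translated into Google Consent Mode signals rather than used to block Google tags outright. The sketch below assumes Google Consent Mode v2 and the standard gtag `consent` commands (`default` and `update`, with the `ad_storage`, `analytics_storage`, `ad_user_data` and `ad_personalization` parameters). The `CmpDecision` shape, `toConsentMode` and `onCmpDecision` are hypothetical names: how OneTrust or a similar tool exposes its decision (category IDs, callbacks) will differ, and whether this can run in your checkout depends on how your pixels and privacy settings are configured, so test it on your own store.

```typescript
// Sketch: passing a consent platform's decision to Google Consent Mode v2.
// Uses a local dataLayer so it runs outside a browser; on a real page you
// would use window.dataLayer and the standard gtag.js snippet instead.

type ConsentValue = "granted" | "denied";

// Hypothetical shape of a CMP decision (e.g. from OneTrust or similar).
interface CmpDecision {
  analytics: boolean;
  marketing: boolean;
}

const dataLayer: unknown[] = [];

// Mirrors the standard gtag.js helper, which pushes the arguments object.
function gtag(..._args: unknown[]): void {
  dataLayer.push(arguments);
}

// Map the CMP's categories onto Consent Mode v2 parameters.
function toConsentMode(decision: CmpDecision): Record<string, ConsentValue> {
  const v = (ok: boolean): ConsentValue => (ok ? "granted" : "denied");
  return {
    analytics_storage: v(decision.analytics),
    ad_storage: v(decision.marketing),
    ad_user_data: v(decision.marketing),
    ad_personalization: v(decision.marketing),
  };
}

// 1. Deny storage by default. In an advanced Consent Mode setup, Google tags
//    still load and send cookieless pings while storage is denied.
gtag("consent", "default", {
  ...toConsentMode({ analytics: false, marketing: false }),
  wait_for_update: 500, // ms to wait for the CMP before tags fire
});

// 2. When the visitor answers the banner, send an update.
function onCmpDecision(decision: CmpDecision): void {
  gtag("consent", "update", toConsentMode(decision));
}

onCmpDecision({ analytics: true, marketing: false });
console.log(dataLayer.length); // 2
```

The design choice here is that a rejection changes what Google tags are allowed to store, not whether they run, which is what keeps cookieless pings available for modelling.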

If you’re unsure of your current status or how to complete these steps feel free to email data@fabric-analytics.com and we can help.

How can Fabric help?

At Fabric Analytics we can provide different levels of support to ensure you’re fully ready for these new changes.

We work on a transparent, hourly model, so reach out to us and we can support you with any of your needs.

If you need support moving your third-party tracking from a custom checkout.liquid instance to Checkout Extensibility, please contact us to find out how our team of experts can help.

As one of the most experienced tracking and data agencies in the ecosystem, with a first-class team of experts and developers, we are perfectly positioned to consult on your bespoke requirements and implement them.

Developer Resources

Here is a list of Shopify resources with further details about the transition from checkout.liquid to Checkout Extensibility:

- Shopify Developer Documentation: Checkout Extensibility

- Shopify Community: Checkout Extensibility Announcement

- Shopify Help Center: Migrating from checkout.liquid

- Shopify Plus Blog: Secure and Scalable Checkout

- Shopify Developers Blog: Customizing Checkout

These resources provide further details about the transition, including the reasons behind it, the impact of missing the deadline, and the benefits of the new Checkout Extensibility framework.