Google’s New Tools: Refining First-Party Data Strategies and Their Impact on GA4

Google’s recent updates (Tag Diagnostics and a streamlined Consent Management Setup) aim to bolster first-party data strategies across Google Ads, Google Analytics (GA4), and Google Tag Manager (GTM). While these tools bring significant improvements, there are some nuances and challenges to consider, especially for those who have been working with Tag Diagnostics for a while and are familiar with the complexities of setting up Consent Mode. Let’s explore these tools’ practical implications and how they affect your GA4 implementation.

Tag Diagnostics: A Mixed Bag of Benefits and Limitations

Tag Diagnostics isn’t entirely new; many users have been familiar with it for several weeks or even months, particularly those who first encountered it in Google Tag Manager (GTM) before seeing it in GA4. The tool’s primary function is to flag potential issues in your tagging setup, such as missing tags or misconfigured events. However, while the concept is solid, the execution hasn’t been flawless.

- Accuracy Issues: One common experience with Tag Diagnostics is its occasional inaccuracy. For example, it might flag certain pages as missing GTM, only for users to find that GTM is indeed present when they manually check. These false positives can be frustrating and may lead to unnecessary troubleshooting, which is a drawback for businesses relying on precise data to inform their strategies.

- Ongoing Value: Despite these imperfections, Tag Diagnostics still offers value by providing a centralised view of your tagging health. For GA4 users, it can serve as a helpful tool to ensure that most tagging issues are caught early, even if it occasionally requires manual verification. As Google continues to refine this tool, we can expect improvements in its accuracy and reliability.
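Because Tag Diagnostics sometimes reports GTM as missing on pages where it is present, a small scripted check of the served HTML can speed up the manual verification step. The TypeScript below is a sketch for illustration, not a Google tool: `checkGtm`, `GtmCheck` and the sample container ID `GTM-ABC1234` are hypothetical, and it assumes the standard GTM install snippet, which loads `googletagmanager.com/gtm.js` and names the container ID in both the inline script and the `<noscript>` iframe.

```typescript
// Result of scanning one page's HTML for a GTM install.
interface GtmCheck {
  loaderFound: boolean;   // the gtm.js loader URL appears in the HTML
  containerIds: string[]; // distinct GTM container IDs, in order of first appearance
}

// Hypothetical helper: looks for the standard GTM snippet in raw HTML.
// The standard snippet loads https://www.googletagmanager.com/gtm.js and
// names the container ID in the inline script and in the <noscript>
// iframe (ns.html?id=GTM-...).
function checkGtm(html: string): GtmCheck {
  const loaderFound = html.includes("googletagmanager.com/gtm.js");
  // Container IDs are "GTM-" followed by uppercase letters and digits.
  const matches = html.match(/\bGTM-[A-Z0-9]+\b/g) ?? [];
  const containerIds = Array.from(new Set(matches)); // Set keeps insertion order
  return { loaderFound, containerIds };
}

// Example: an abridged standard snippet plus its <noscript> fallback,
// using a made-up container ID.
const page = `
<script>(function(w,d,s,l,i){ /* ... */ j.src='https://www.googletagmanager.com/gtm.js?id='+i+dl; /* ... */
})(window,document,'script','dataLayer','GTM-ABC1234');</script>
<noscript><iframe src="https://www.googletagmanager.com/ns.html?id=GTM-ABC1234"></iframe></noscript>`;

console.log(checkGtm(page));
// { loaderFound: true, containerIds: [ 'GTM-ABC1234' ] }
```

In Node 18 or later you could feed it a live page with `checkGtm(await (await fetch(url)).text())`. A static scan only sees the HTML the server returns, so a container injected later by another script, or loaded from a custom first-party domain, will not show up; treat a negative result as a prompt to check GTM Preview mode or the browser’s network panel, not as proof that GTM is missing.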

Consent Management Setup: Streamlining a Critical Process

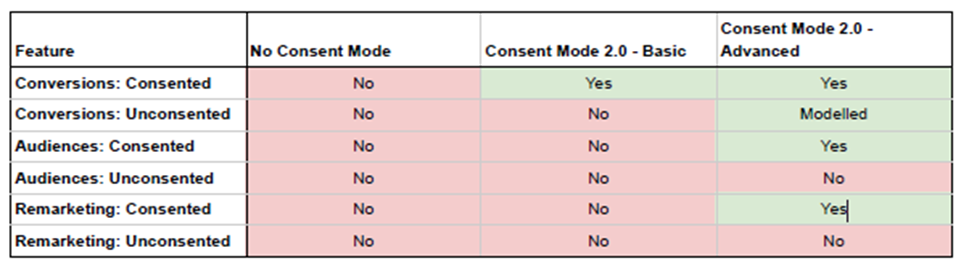

The new Consent Management Setup is a welcome addition, especially for businesses that need to navigate the complex landscape of data privacy regulations like GDPR and CCPA. While many companies have developed robust processes for setting up Consent Mode, this tool aims to make the process even more accessible and less technically demanding.

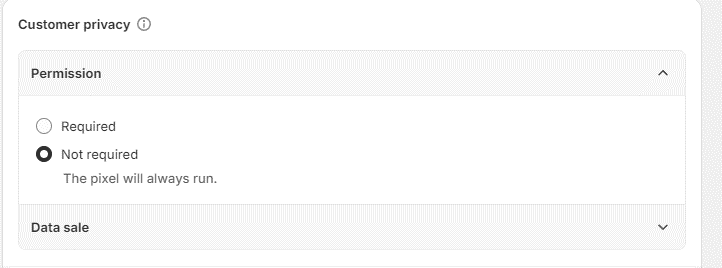

- Easing the Setup Process: Even for those with established processes, having a more integrated and user-friendly setup within GA4 and GTM is beneficial. The streamlined approach reduces the complexity of deploying consent banners and managing consent mode, which can save time and reduce the likelihood of errors.

- Limited CMP Integration: A notable limitation is that the setup currently integrates with only three Consent Management Platforms (CMPs): Cookiebot by Usercentrics, iubenda, and Usercentrics. While these are among the more popular CMPs, the narrow selection might be disappointing for businesses using other platforms. If you already work with one of these three, the integration should fit into your existing workflow with little friction. There’s hope that Google will expand this list over time, offering greater flexibility and choice for businesses.
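Whichever CMP you pick, what it ultimately does for Google tags is set consent mode signals: a `default` state before tags fire, then an `update` once the visitor makes a choice. The TypeScript sketch below illustrates that flow. The `gtag` `consent` `default`/`update` commands, the four Consent Mode v2 consent types and `wait_for_update` are Google’s parameters; the `CmpChoices` shape, the `toConsentState` mapping from banner categories to consent types, and the 500 ms wait are assumptions for illustration, since each CMP exposes its own categories and callbacks.

```typescript
// Consent Mode v2 values and the four consent types Google tags read.
type ConsentValue = "granted" | "denied";

interface ConsentState {
  ad_storage: ConsentValue;
  ad_user_data: ConsentValue;
  ad_personalization: ConsentValue;
  analytics_storage: ConsentValue;
}

// Hypothetical banner categories; Cookiebot, iubenda and Usercentrics each
// expose their own category names and callbacks.
interface CmpChoices {
  statistics: boolean;
  marketing: boolean;
}

const toValue = (allowed: boolean): ConsentValue => (allowed ? "granted" : "denied");

// Assumed mapping: "statistics" drives GA4 storage, "marketing" drives the
// three advertising-related consent types.
function toConsentState(choices: CmpChoices): ConsentState {
  return {
    analytics_storage: toValue(choices.statistics),
    ad_storage: toValue(choices.marketing),
    ad_user_data: toValue(choices.marketing),
    ad_personalization: toValue(choices.marketing),
  };
}

// Stand-in for the page's dataLayer/gtag so the sketch runs outside a browser.
// The real Google tag snippet defines:
//   window.dataLayer = window.dataLayer || [];
//   function gtag(){ dataLayer.push(arguments); }
const dataLayer: unknown[][] = [];
function gtag(...args: unknown[]): void {
  dataLayer.push(args);
}

// 1. Before any Google tag fires: deny everything, and let tags wait up to
//    500 ms for the CMP to send an update (e.g. a choice saved earlier).
gtag("consent", "default", {
  ...toConsentState({ statistics: false, marketing: false }),
  wait_for_update: 500,
});

// 2. When the visitor saves their banner choice (here: statistics only).
gtag("consent", "update", toConsentState({ statistics: true, marketing: false }));
```

In practice a CMP’s GTM template or script typically issues both commands for you, so hand-written code like this is mainly useful for understanding, or debugging, what the integration sends to GA4.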

The Impact on GA4 Implementation

For GA4 users, these tools are important steps forward, though they come with caveats:

- Enhanced, But Imperfect, Data Accuracy: Tag Diagnostics plays a crucial role in maintaining the integrity of your GA4 data, even if it sometimes requires manual checks to confirm its alerts. The tool can help you catch most issues before they affect your data quality, which is essential for making accurate, data-driven decisions.

- Simplified Compliance, with Room to Grow: The Consent Management Setup should make it easier to maintain compliance with privacy regulations, reducing the technical burden on your team. While the current CMP options are limited, they include some of the most widely used platforms, which should cover the needs of many businesses. For GA4 users, this means you can implement and manage consent more efficiently, which helps keep your data collection both compliant and effective.

- Looking Ahead: As Google continues to refine these tools, it’s likely that we’ll see improvements in accuracy for Tag Diagnostics and an expanded list of CMP integrations for Consent Management Setup. Staying up to date with these developments will be key to maximising the benefits for your GA4 setup.

Google’s new tools, while promising, are not without their challenges. Tag Diagnostics offers a useful, if occasionally imperfect, way to check that your tagging is accurate across GA4 and GTM. Meanwhile, the Consent Management Setup simplifies the complex task of managing user consent, though its utility is somewhat limited by the current number of integrated CMPs.

For businesses already working within GA4, these tools provide valuable enhancements, even if they require some manual oversight or adaptation. As Google refines these features, the hope is that they will become even more robust, offering greater accuracy and flexibility in managing first-party data strategies.